Five Trends in Enterprise Data 2023

Author: Stanislav Makarov

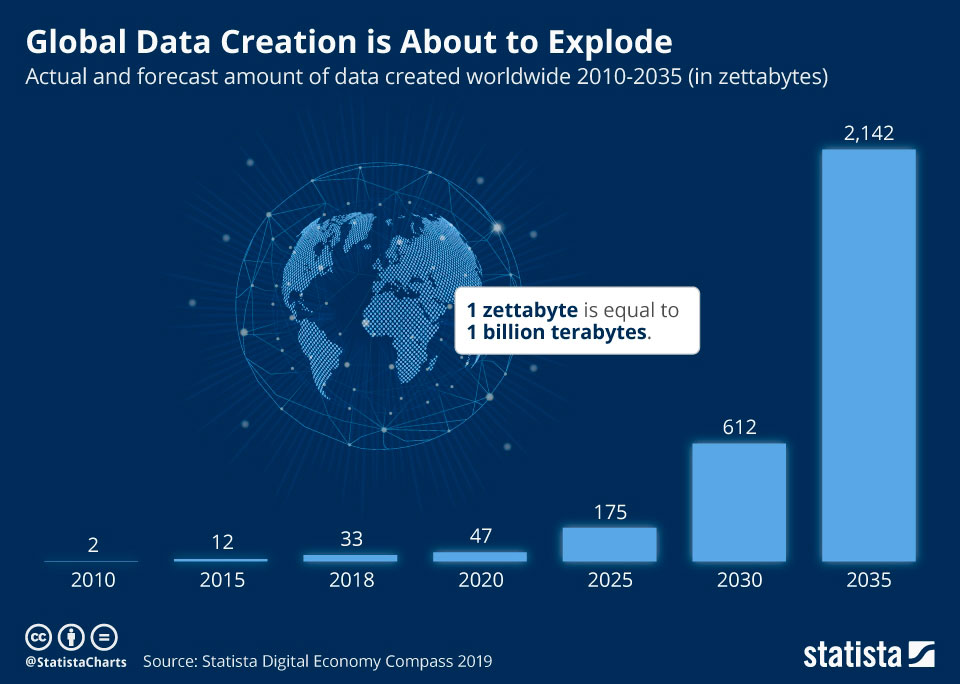

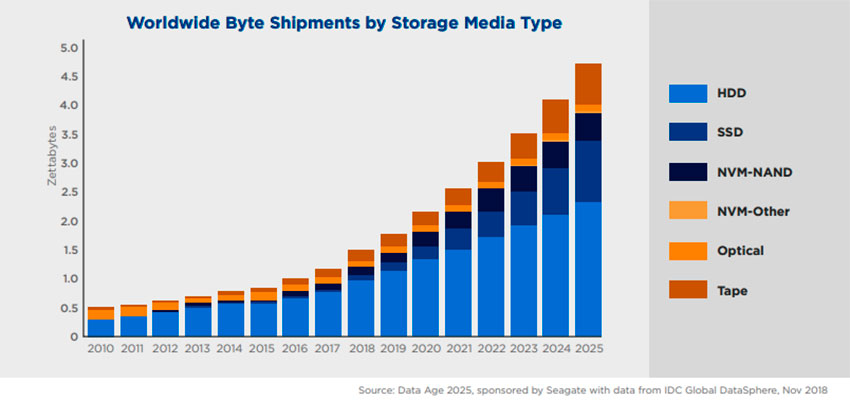

According to IDC Worldwide Global Forecast, by 2025 the total amount of business data will reach 175 zettabytes. For reference: one zettabyte is equal to one billion terabytes. Have you tried to picture it? I couldn't. And this is just the beginning; the plot thickens. When you see a picture like this, you are tempted to ask yourself how to store and work with all this. It seems that very soon all businesses will face a full technical re-equipment, because buying additional servers will not be enough. Big Data is coming from where you didn't expect it.

Source: Statista.com

Data is growing at an incredible rate. The IDC Global DataSphere measures the amount of new data created, collected, replicated and consumed each year. According to John Rydning, its research vice president, "The Global DataSphere is expected to more than double in size from 2022 to 2026. Enterprise DataSphere will grow more than twice as fast as Consumer DataSphere over the next five years, further forcing enterprise organizations to manage and protect the world's data, creating data activation opportunities for business and society."

The IDC Global DataSphere study also documented that "64.2 zettabytes of data were created or replicated in 2020," and predicted that "the global rate of data creation and replication will have a compound annual growth rate (CAGR) of 23 percent over the projected 2020-2025 period." At that rate, more than 180 zettabytes will be created in 2025 - that's 180 billion terabytes.
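The arithmetic behind that figure is easy to check. Below is a minimal sketch that compounds the 2020 volume at the quoted CAGR; the function and variable names are illustrative, and only the three input numbers come from the study quoted above.

```python
def project(value, cagr, years):
    """Compound `value` forward at annual rate `cagr` (a fraction) for `years` years."""
    return value * (1 + cagr) ** years


base_2020_zb = 64.2   # zettabytes created or replicated in 2020
cagr = 0.23           # 23 percent per year
years = 5             # 2020 -> 2025

projected_2025_zb = project(base_2020_zb, cagr, years)

# One zettabyte is one billion terabytes.
projected_2025_tb = projected_2025_zb * 1e9

print(f"2025 projection: {projected_2025_zb:.1f} ZB")
print(f"That is about {projected_2025_tb:.3e} TB")
```

The result lands just above 180 zettabytes, which agrees with the "more than 180" claim.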

1. Big Data came and stayed with us

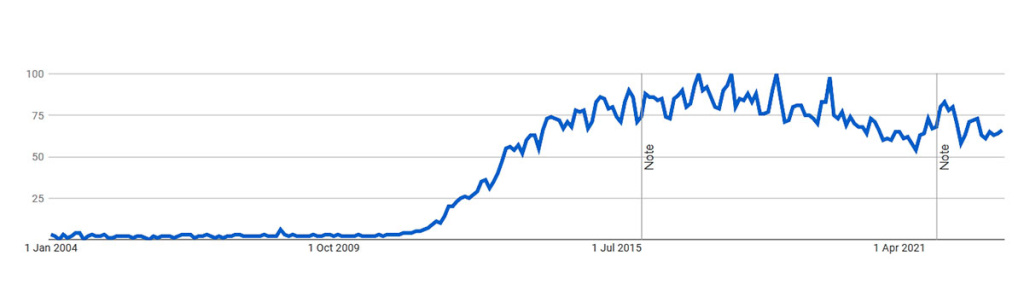

We thought Big Data was just hype and would no doubt go away soon. It's time to admit that we were wrong. Big Data has come to stay with us forever. But now you can talk about your corporate Big Data projects without that awkward feeling that you're implementing blockchain or something new-fangled just to show off. Google Trends convincingly shows that there was indeed hype, followed by a decline, but when the hype ended, interest in the technology did not fade and remained at a high level.

Source: Google.com

In short, Big Data is no longer a trend, but a fait accompli. That is why this section sits outside the five trends promised in the title: it is their foundation. Today's culture of working with corporate data is built on the ideas voiced in the concept of Big Data, and it does not matter how big your data really is.

- What about trends? - The current trends are essentially embodiments of certain aspects of the Big Data concept. Remember that marketing play on words, that Big Data is the three "V's": Volume, Velocity and Variety? Then they added Veracity and Value, and it became five. Let's not get carried away with formalism and force the facts into this pattern, but we have to admit that the marketers were right about a lot of things.

So let's look at current data trends.

2. Real time is getting real.

In former, slower times, business was quite happy with monthly or even quarterly reports for the operational management of the enterprise. It was mostly developers of embedded systems and industrial automation who knew about QNX, Nucleus RTOS, VxWorks and similar real-time systems, while ordinary managerial work did not need such a subtle sense of time.

Total digitalization made it possible, hypothetically, to get any data instantly. Businesses sensed this and began to demand from IT that any report be on their screen at the click of a mouse. For now they agree to the click of a mouse, but soon they will want it at the snap of a finger. (That said, it's unclear why the rituals of printing out the same monthly and quarterly reports and submitting them to the chief executive for signature are being preserved, but I don't think that will last.)

Managers now want to work in real time, so that corporate systems respond to business events with almost zero delay. And not just to learn that a sale has occurred in a particular chain store, but to analyze all sales and make decisions on the fly. For example, to cancel a discount if the goods are already selling well. Or, on the contrary, to drop the price immediately if a product is at risk of not selling before its expiration date. It looks like many industries will soon be operating at the same pace as traders on the stock market, where time is counted in milliseconds.
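The two pricing reactions described above can be written down as a simple rule. This is only a sketch under stated assumptions: the `StockItem` fields, the `reprice` function and all thresholds are hypothetical, invented for illustration, and a real system would evaluate such a rule against a live stream of sales events.

```python
from dataclasses import dataclass


@dataclass
class StockItem:
    discount: float            # active discount as a fraction, e.g. 0.10
    units_sold_last_hour: int  # recent sales velocity
    days_to_expiry: int        # shelf life remaining


def reprice(item, hot_sales_threshold=50, expiry_alert_days=2, markdown=0.30):
    """Return the discount to apply now. All thresholds are illustrative assumptions."""
    if item.days_to_expiry <= expiry_alert_days:
        # At risk of not selling before expiration: drop the price immediately.
        return max(item.discount, markdown)
    if item.units_sold_last_hour >= hot_sales_threshold:
        # Already selling well: cancel the discount.
        return 0.0
    # Otherwise leave the current discount unchanged.
    return item.discount


selling_well = StockItem(discount=0.10, units_sold_last_hour=80, days_to_expiry=10)
near_expiry = StockItem(discount=0.10, units_sold_last_hour=5, days_to_expiry=1)

print(reprice(selling_well))
print(reprice(near_expiry))
```

In this sketch the expiry check comes first, so a product that is both selling well and about to expire is still marked down; that ordering is a design choice, not something the text prescribes.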

There is even a special term, real-time business intelligence (RTBI), a concept that describes the process of providing business intelligence (BI) or information about business operations as they occur. RTBI doesn't just let you see transactions quickly; that has been possible for a long time. Online analytics lets you initiate corrective actions and change settings to optimize business processes while they are running.

Why does everyone suddenly need this speed? - There are two main reasons. First, many businesses have gone online: banks and telecom, e-commerce and retail (although the line between them has almost disappeared), taxis and other transport, and public services as well. Everyone wants to know everything, everywhere, all at once. Hence dynamic pricing, marketing campaigns, payments and cash control, and so on.

The second impetus for accelerating data analysis is, let's say cautiously for now, the beginning of widespread penetration of IoT solutions in various industries. Sensors typically don't generate very large data packets. For example, a taxi or a trolleybus just transmits its coordinates, latitude and longitude - just a few digits. But the sensors are legion, and at this scale you can't go far with an ordinary client-server architecture. Therefore message brokers, columnar databases, and other things that used to be considered exotic have firmly established themselves in the corporate landscape.

3. Dataviz - looking at the beautiful

Data visualization, or "dataviz" as it is now fashionable to say, is perhaps the second most important trend. Without it, "all that we have worked so hard for" will remain at the bottom of your data lakes, no matter how beautiful they are.

Unfortunately, people are not adapted to perceive a flow of numbers directly with their senses. But the human brain, by an often-quoted estimate, can process visuals 60,000 times faster than text, let alone numbers. It is also able to recognize trends, identify potential problems and predict future developments using visual representations of data, such as graphs and charts. It would be wise to use this property, wouldn't it?

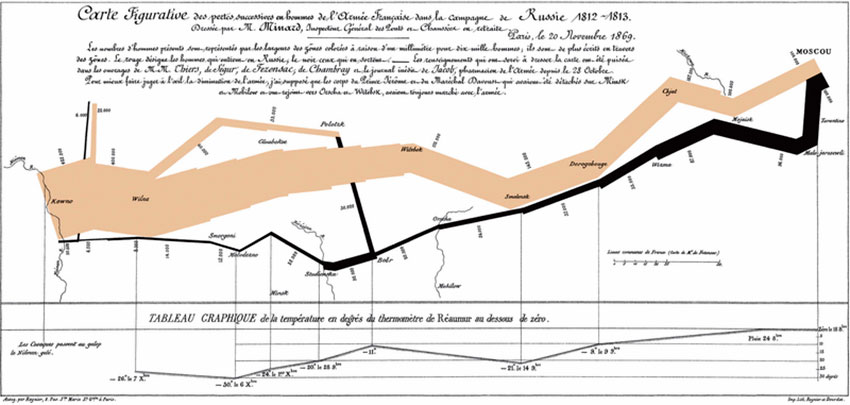

The problem is not new; data scientists have long been racking their brains over how to cram a lot of numbers into a single picture so that everything makes sense at a glance. Take this famous chart by the father of infographics, Charles Joseph Minard, showing Napoleon's Russian campaign. The thick band shows the size of his army at particular geographic points during the advance and the retreat. It also shows five other types of data: distance traveled, temperature, latitude and longitude, direction of travel, and dates. It couldn't be clearer.

Source: Wikipedia.org

Alas, the dashboards that are so popular with C-level management today can rarely boast such filigree elaboration that the state of the business is crystal clear. The problem, as always, lies at the junction: on the one hand, you need a good understanding of the nature and meaning of your data; on the other, you need strong UX skills to present this data visually. These two are rarely combined, so corporate dashboards are full of endless traffic lights and speedometer-style dials that clarify nothing.

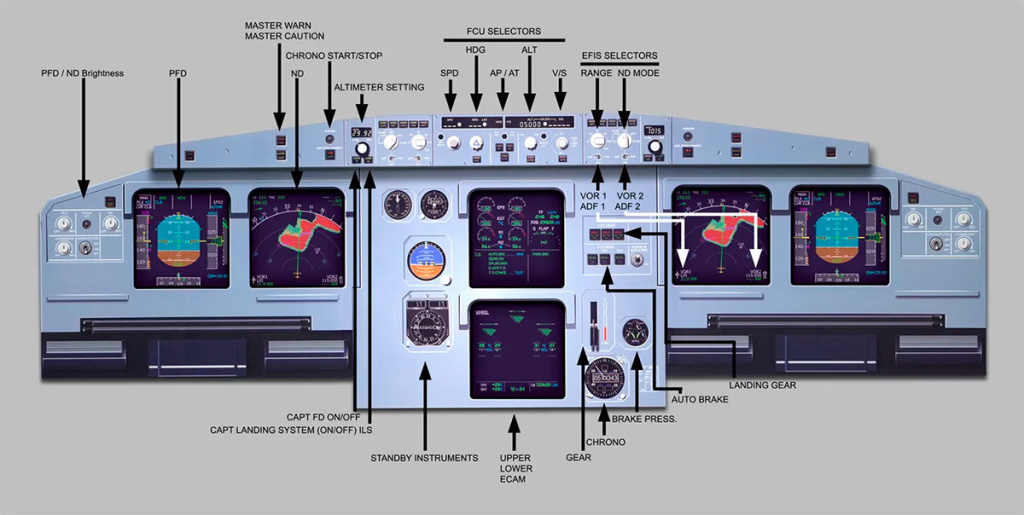

At the same time, they usually cite aircraft dashboards as an example - look at how many sensors they have! But they forget that pilots have to study for several years to figure it all out and retrain for each new model. Where have you seen a CEO who has spent at least a couple of weeks learning how to use the dashboard?

But slowly the process is underway. Companies are increasingly beginning to recognize the functional value of aesthetics and, as a result, are paying more attention to user interface design in reports and dashboards. Their goal is not just to match the corporate identity, but more importantly, to improve usability and make the data easier to understand. And, by the way, modern aircraft dashboards have become much more concise. This is what the main control panel of the Airbus A320 liner looks like:

Source: https://pmflight.co.uk/free-airbus-cockpit-posters/

And how can one not recall the saying of the legendary Tupolev: "Unattractive planes don't fly." If the corporate dashboard looks terrible, your business won't fly either.

- So what's the big deal? - the reader will say. - At worst, it's money wasted on developing the dashboard. Corporations waste far larger sums than that.

In fact, there is a more dangerous trend: ignoring data when making management decisions. The volume of information is growing, and its structure is becoming more complex. And you cannot fully delegate the task of data analysis to your data scientists, because in the end you won't believe them anyway.

The Economist Intelligence Unit surveyed several hundred international executives about their decision-making processes, and only 10% of those surveyed said their decisions were based mostly on intuition, while the rest said they made decisions based on data analysis. Seems like a good thing, doesn't it? However, when asked how they would make a decision if the data contradicted their gut feeling, only 10% said they would agree with the data. Simply put, nine out of ten executives would find a way to ignore the data if it went against their gut feeling.

A paradox? - Not at all! It's a normal property of human nature to reject anything that doesn't fit into your picture of the world. Even when business leaders trust their data analysis teams, it's frustrating to accept advice without a full understanding and appreciation of the mechanisms that lead to those conclusions.

- So what to do? - you ask. - Oh, well, nothing; the invisible hand of the market will put everything in its place. Executives who ignore the data will die out like dinosaurs. Not physically, of course: technology now develops faster than generations change. And the rest will have to develop competence in data science, at least to the level of clearly formulating their requirements for those same dashboards.

By the way, dashboards are not the only thing that matters. Data visualization is a broader area, and it includes regular reports, which are increasingly becoming infographics, which is also a good thing. The main difference between data visualization in reports and in dashboards is the frequency of data updates. But this distinction is gradually blurring as reports become interactive and updatable.

There is another trend worth mentioning: animation and video. Thanks to evolution, the brain reacts faster to moving images than to static ones; otherwise our primitive ancestors would have been eaten by wild animals. It is not without reason that the red light on a dashboard always blinks. But for a long time all animation was seen as an unnecessary embellishment and was avoided in business applications. With the abundance of data that we have, it is no longer enough to highlight the important things with color or to show the dynamics of change; data has to be shown in motion. But this is a separate science that has to be mastered, otherwise you will just get an incomprehensible flicker on the screen.

According to Fortune Business Insights, the global data visualization market is projected to reach $19.20 billion in 2027, with a compound annual growth rate of 10.2% over that period. And there could be more - the boundaries of this segment are hard to pin down.

4. The ubiquitous AI comes to the rescue

AI (artificial intelligence) has penetrated everywhere it possibly could, and even where it couldn't. Data processing is no exception; that is, in fact, what AI was created for, apart from taking over the world and destroying mankind. Lately, the introduction of AI in business has usually meant the use of neural networks for the analysis of customer actions, demand forecasting, optimization of production processes and the like. But to talk about this today as an innovation would be mauvais ton: these technologies, though not yet completely routine, are already common knowledge. Everyone also knows where the pitfalls lie: first of all you need a labeled dataset (which is quite troublesome) on which to train your neural network, then trial operation, retraining, and retraining again. And maybe it will work.

But there is a better way! Of course, the first thing that comes to mind is ChatGPT, which became super-popular in early 2023. Within just two months of its launch, its audience had reached 100 million users, making it "the fastest-growing consumer app in history," according to a research report by investment bank UBS. By comparison, it took TikTok nine months to reach that milestone and Instagram about 2.5 years.

As ChatGPT itself says, "Generic AI models that are already trained on big data can be applied to a variety of business problems. For example, you can use off-the-shelf models for time-series analysis, data classification, and so on." It can also be used to create intelligent assistants for every profession that involves working with text, from a secretary or clerical worker to the author of fantasy novels. On a more down-to-earth note, a ChatGPT-based solution can be put on the first line of support, where it will certainly cope better than a dull IVR.

But ChatGPT, and generative AI technology in general, cannot be trusted with serious tasks, because it does not find an answer but invents one. Figuratively speaking, it can pass off its own hallucinations as fact, and it will do so quite confidently. In the industrial sector, this defect can cause physical damage and injure people. In general, use with caution.

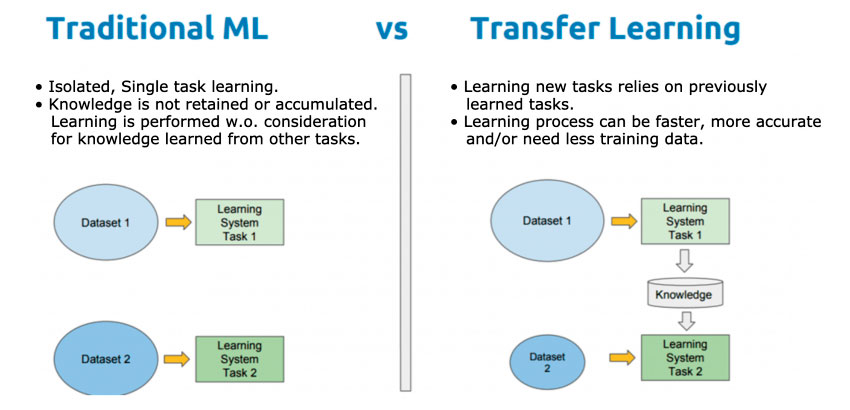

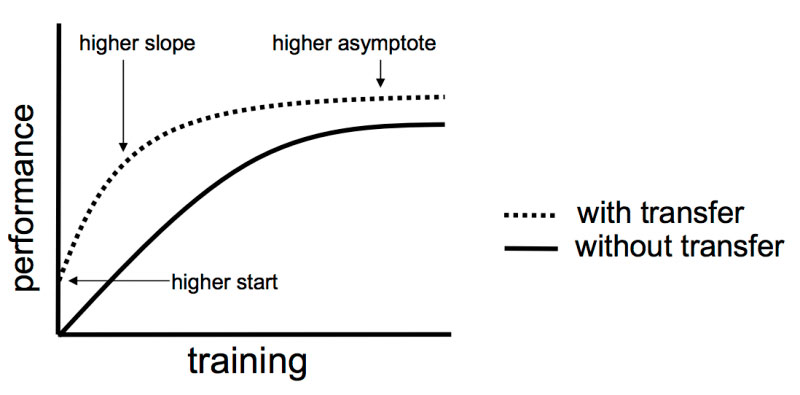

The current hype around generative AI bots has somewhat overshadowed all the other AI technologies that might be even more useful for specific tasks. The first one worth mentioning is transfer learning (TL), a technique that lets you use the knowledge gained from training a model on one task to solve other tasks. Compared to traditional machine learning (ML), transfer learning saves a lot of time.

Source: https://www.v7labs.com/blog/transfer-learning-guide

For example, suppose you need different models to detect trucks and buses in images. With a conventional ML approach, you would need two datasets to train two different neural networks. Using transfer learning, you first create a model that identifies all motor vehicles, and then use it as the base for models that recognize trucks, buses, limousines, convertibles, tractors, GAZelle vans, and so on. It's a bit like object-oriented programming, isn't it?

Source: https://machinelearningmastery.com/transfer-learning-for-deep-learning/

As a result, with transfer learning we get a higher starting point, a faster ramp-up, and a higher quality of results. Remember AlphaGo? - The neural network developed by DeepMind, which beat the strongest human players at Go. Building on it, DeepMind then developed the AlphaZero neural network, which, after training for just 24 hours, could beat the best specialized chess, shogi, and Go programs. This was in 2017; at that time the term transfer learning had not yet entered wide use, and strictly speaking AlphaZero was arranged somewhat differently, but in general you can consider it one of the pioneers of transfer learning. The effectiveness of this method is perhaps obvious.

Concluding this section, we can say that the use of AI technologies, and the latest and most advanced ones, is rapidly becoming mainstream in all aspects of working with corporate data. This raises a widely discussed topic that AI will deprive people of their jobs. Some straightforward jobs, like frontline support in call centers, could possibly be taken over by AI. However, in positions that involve personal responsibility, AI cannot be held accountable. It won't go to jail on your behalf. Similarly, for creative tasks, AI falls short. It can generate a hundred logo options faster than a designer, but only a human can choose the one that fits the corporate context. Replacing a programmer is also not feasible because it would first require replacing the business analyst who assigns tasks to the programmer. However, such changes would bring about massive transformations, and they would happen quickly. There is a high risk of missing this crucial moment and falling hopelessly behind.

5. Next-generation Data Storage

Futurologists and visionaries easily operate with petabytes, exabytes, zettabytes and other inordinate amounts of data. However, every reasonable IT director inevitably asks one practical question: where shall we put all this wealth, and how much will it cost us?

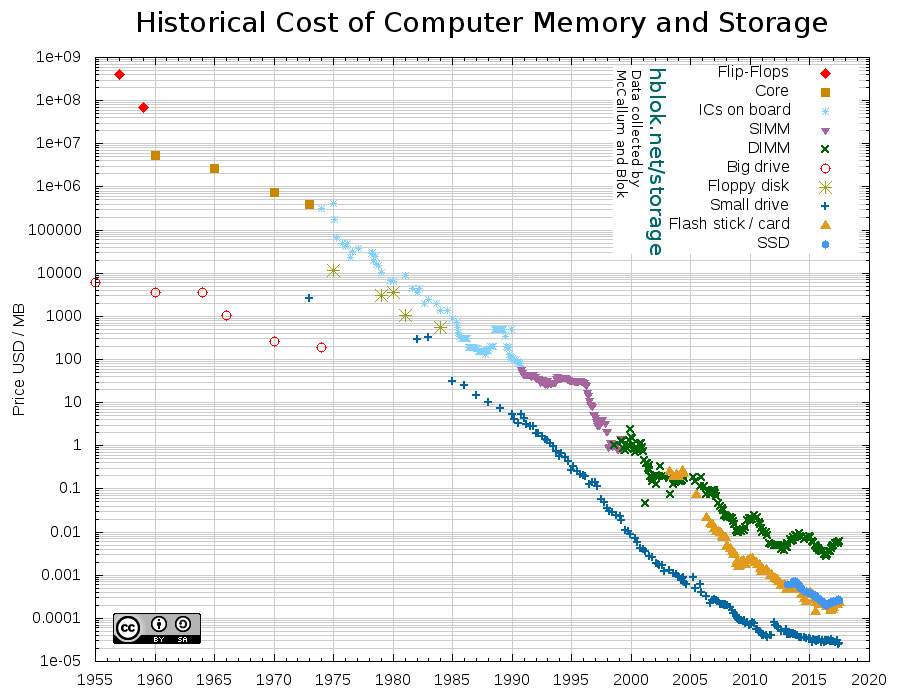

Currently, the average share of IT infrastructure costs is about 3-4% of the total budget of U.S. companies, and data storage systems can account for up to 30% of total IT costs (according to ChatGPT; let's leave that on its conscience, if it has one). That means about 1% of the total budget goes to storage. And if the volume grows by a factor of 100, will it be 100%? And then how does a business live? - Of course, the cost per megabyte is steadily decreasing, but will storage vendors have time to provide a decent response to the impending information explosion?
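The back-of-the-envelope estimate above can be reproduced in a few lines. This is a sketch under stated assumptions: the percentages are the ones quoted in the text, while the `storage_budget_share` helper and the tenfold unit-cost decline in the last line are hypothetical and serve only to show how falling prices offset growing volumes.

```python
it_share_low, it_share_high = 0.03, 0.04  # IT infrastructure: 3-4% of total budget
storage_share_of_it = 0.30                # storage: up to 30% of IT costs

# Storage as a share of the total budget.
storage_low = it_share_low * storage_share_of_it
storage_high = it_share_high * storage_share_of_it
print(f"Storage share today: {storage_low:.1%} to {storage_high:.1%}")


def storage_budget_share(volume_growth, unit_cost_decline, base_share=0.01):
    """Storage share of the budget after data volume grows by `volume_growth`
    while the cost per unit of capacity falls by a factor of `unit_cost_decline`."""
    return base_share * volume_growth / unit_cost_decline


# Volume grows 100x with no price decline: the whole budget goes to storage.
print(f"{storage_budget_share(100, 1):.0%}")

# Hypothetical scenario: volume grows 100x, unit cost falls 10x.
print(f"{storage_budget_share(100, 10):.0%}")
```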

Source: https://hblok.net/blog/posts/2017/12/17/historical-cost-of-computer-memory-and-storage-4/

So far, there is no firm certainty. For now, traditional HDD/SSD vendors dominate the market and are not going to give up. In fierce competition they keep improving the characteristics of their devices and reducing prices. Good old magnetic tape is not going to fade away either; it is firmly entrenched in corporate archives. Most LTO-7 and LTO-8 tape drive manufacturers have roadmaps for even higher capacity product lines.

Analysts predict that the next-generation storage market will continue to grow rapidly in the coming years. According to a MarketsandMarkets report, the market is expected to reach $81.0 billion by 2025, growing at an average of 16.7 percent annually.

Experts at the Advanced Storage Technology Consortium believe hard drive capacity will grow to 100TB by 2025 due to new recording technologies such as Shingled Magnetic Recording (SMR), Perpendicular Magnetic Recording (PMR), and Heat-assisted magnetic recording (HAMR). As of mid-2023, 16TB drives are already on the market, so this prediction looks realistic.

Source: Data Age 2025, sponsored by Seagate with data from IDC, 2018

Seen from 2018, there should have been enough room on the market for everyone. But the total figure of 5 zettabytes does not square with the forecast of 175 zettabytes for the same year, 2025, given at the beginning of the article and dated 2019. It is clear that analysts' forecasts are often wide of the mark, but there is definitely cause for concern: there is not enough capacity for everyone.

Joseph Schumpeter, the Austrian economist who coined the term "innovation," used to say, "You can put a hundred carriages in a row, but you still won't get a car." The same goes for disks: you cannot go far with the technologies of the last century. And while Seagate's chief financial officer predicts that hard drives will last another 15 to 20 years, many companies are worried: 62% of respondents surveyed by Ocient said that the volume of data is growing faster than they can store and analyze it.

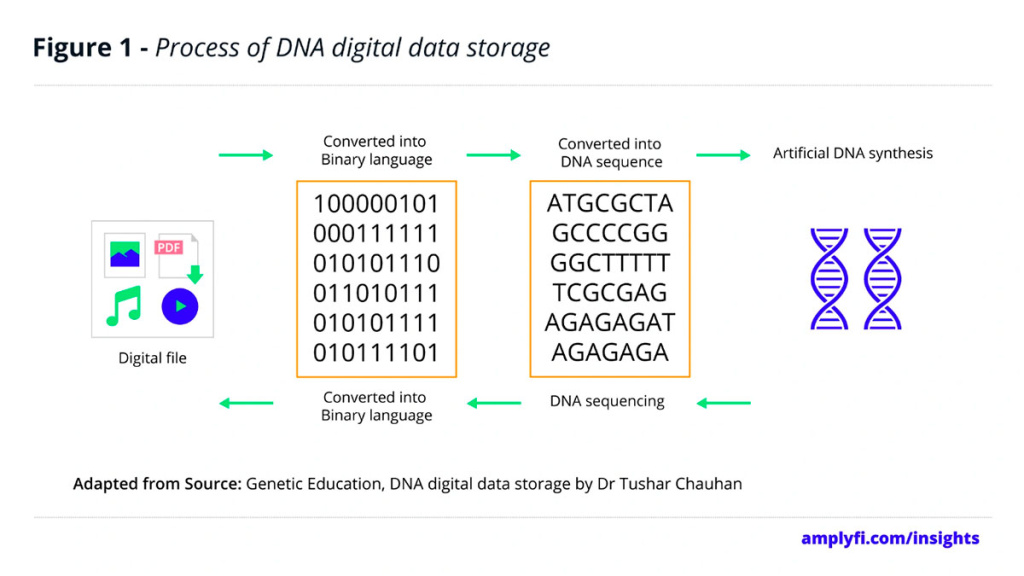

So where is the disruption, the powerful breakthrough that will solve the data storage problem? - Optimists are pinning their hopes on DNA storage, as fantastic as that sounds. DNA can store a staggering amount of information in an almost unimaginably small volume. For example, 33 zettabytes of information recorded in DNA would fit into a ping-pong ball, while all of Facebook's data would be packed into a poppy seed. DNA hardly degrades: if stored in a cool, dry place, the files can last for hundreds of thousands of years. It's hard to believe, but that's what scientists say - and not just British scientists.

All that is left is to implement the technology and set up production. At the level of the idea, everything is simple: we encode the information as a sequence of nucleotide bases, synthesize the DNA, and then read it back. This method has been proven to work, but there is one hitch: in laboratory conditions, attaching one base to DNA takes about one second. Writing an archival file at that speed could take decades, but researchers are developing faster methods, including massively parallel operations that write to many molecules at once.

Source: https://amplyfi.com/2021/08/24/the-future-of-information-storage-dnas-use-for-storing-data/

One of the main issues holding this trend back is cost: synthesizing 1 megabyte of data costs about $3,500. But research is ongoing, and some say the cost could go down to $100 per terabyte of data by 2024 (and that was written in January 2023). Another issue is the speed of writing to DNA, with a long-held record of 200 MB per day. However, at the end of 2021, researchers increased that to 20 GB per day. This is still well below the tape recording rate of 1,440 GB per hour, but it's something.
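To get a feel for these numbers, it helps to put them on a common scale. The following is a rough sketch that uses only the figures quoted above and assumes decimal units (1 TB = 1,000 GB = 1,000,000 MB); the helper name `days_to_write` is illustrative.

```python
GB_PER_TB = 1_000
MB_PER_TB = 1_000_000

dna_old_gb_per_day = 0.2       # long-held record: 200 MB per day
dna_new_gb_per_day = 20        # result reported at the end of 2021
tape_gb_per_day = 1_440 * 24   # tape: 1,440 GB per hour


def days_to_write(terabytes, gb_per_day):
    """Days needed to write `terabytes` at a sustained rate of `gb_per_day`."""
    return terabytes * GB_PER_TB / gb_per_day


print(f"1 TB to DNA at the old record: {days_to_write(1, dna_old_gb_per_day):,.0f} days")
print(f"1 TB to DNA at the 2021 rate:  {days_to_write(1, dna_new_gb_per_day):,.0f} days")
print(f"Tape is {tape_gb_per_day / dna_new_gb_per_day:,.0f} times faster than the 2021 DNA rate")

# Cost of one terabyte at the quoted synthesis price of $3,500 per megabyte.
cost_per_tb_now = 3_500 * MB_PER_TB
print(f"Synthesis cost per TB today: ${cost_per_tb_now:,}")
print(f"Reduction needed to reach $100 per TB: {cost_per_tb_now // 100:,}x")
```

At the quoted price a terabyte costs billions of dollars to synthesize, so reaching $100 per terabyte means a price drop of seven orders of magnitude.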

All in all, the prospects for mass production of DNA storage devices are still not very clear. The technology is still at the research and development stage and is too expensive for commercial use. The market is not there yet, but it is nevertheless projected to grow at a compound annual growth rate of 65.8% through 2028. Well, we'll see!

6. There is never too much security

To emulate Mikhail Zhvanetsky, you could say: "There is business - there is data. There is data - there are risks. There are risks - there are leaks. There are leaks - no data. No data - no business." And continue: "More data, more risk."

What to do about it? Whether you like it or not, you have to invest in information security. Of course, not every leak is fatal for business, but they cause a lot of trouble.

According to a Deloitte survey conducted late last year, 34.5% of executives surveyed reported that their organizations' accounting and financial data had been targeted by cybercriminals in the past 12 months. Within that group, 22% had experienced at least one such cyber event, and 12.5% had experienced more than one. Unfortunately, the report doesn't specify whether these were actually targeted attacks, or whether someone in the accounting department accidentally opened a phishing email and caught a trojan. And in 2023, nearly half (48.8%) of executives expect an increase in the number and scope of cyberattacks targeting their organizations' accounting and financial data. Yet only 20.3 percent of respondents say their organizations' accounting and finance departments are working closely and consistently with their counterparts on cybersecurity.

Well, in general, this is nothing new: the weakest link in the security system is still the human being, especially a user who is not too competent or responsible. And it is obvious that this problem cannot be solved by purely technical means, even by implementing various draconian systems that control an employee's every step. You can put people under stress, but you still won't get security. There is no way around it: people need to be taught. It is not enough to just tell them "do not open suspicious emails"; you need practical exercises, simulated attacks followed by debriefing, and so on.

But, alas, demand for such products and services across the entire information security (IS) market is simply minuscule. So far, the problem is mostly being "solved" by having a new employee sign a sheet of information security instructions, so that later one can say "we warned you".

Summing up this topic, we can say that in information security a turn toward the human being is long overdue; the weakest link should be strengthened. However, we must admit that we are unlikely to see this soon. IS services prefer to play the role of a punishing sword for other employees when an incident occurs, so it is quite natural that accountants, financiers, lawyers, economists, salespeople, marketers and all other office employees are not very keen on contacting IS specialists.

Of the new trends in data protection in 2023, by far the most striking is the arrival of AI, and on both sides of the front: this technology is successfully used by both IS developers and cybercriminals.

As we know, one of ChatGPT's strengths is its ability to write code, including malicious code. Of course, it won't write a complex system by itself, but often a developer just needs a little help. As a result, a crowd of homegrown hackers came running and, in the breaks between classes, tried to break into sites. This was not a significant threat, but it was notable. More advanced hackers could use ChatGPT to write polymorphic malware, experts warn, and that would be worse. Attackers are also expected to use it to create sophisticated and realistic phishing attacks. Gone are the bad grammar and strange wording that were the tell-tale signs of a phishing scam. Chatbots will now mimic native speakers of any language and deliver targeted messages. ChatGPT is capable of seamless language translation, which will change the rules of the game for foreign adversaries.

But these are all known attack vectors, only by new means. There is also something genuinely new: AI systems are becoming, dare we say it, critical elements of the infrastructure, and are themselves in the crosshairs.

Artificial intelligence technology stores huge amounts of data and then uses that information to generate answers to questions and prompts. And whatever is left in the chatbot's memory becomes "fair game" for other users. For example, chatbots can record one user's notes on any topic and then summarize that information or search for additional information. But if those notes contain sensitive data, such as an organization's intellectual property or confidential customer information, they end up in the chatbot's library. The user no longer has control over the information. Perhaps it will all come down to corporations routing requests to ChatGPT and its friends only through the security department. But, as you can imagine, it doesn't work like that in real life. We're back to the fact that we need to educate people, and bans alone will not solve the problem.

Even ChatGPT itself has had an incident: a vulnerability in the Redis client library allowed some users to see the chat history of other active users. It lasted a very short time and was quickly fixed, but it did happen, so both developers and users have something to think about.

Looking on the bright side, IDC states that AI in the cybersecurity market is growing at a compound annual growth rate of 23.6% and will reach $46.3 billion in 2027.

Using AI technology helps to recognize attacks, not just to protect against known vulnerabilities, and can make cybersecurity easier, more efficient and cheaper. The last point is especially important, and it marks a turning point: thanks to advances in AI and its commoditization, attack detection tools have become available not only to large organizations, but also to medium and small businesses.

Final

And finally, I asked ChatGPT to name the next "five V's" for big data, to outline plans for the future. And this is what it came up with:

- Visualization - refers to the ability of companies to present data in a clear and understandable way for decision making.
- Validation - refers to the process of verifying the validity and accuracy of data before it is used.
- Versioning - refers to creating and managing different versions of data and software.
- Variability - refers to the variability of data and the ability of companies to adapt to these changes.
- Volatility - refers to the variability of data and its tendency to change rapidly over time.

It has to be said, this is not unreasonable. Visualization is already among the trends, and validation is as necessary as air, given the abundance of fakes that we see. Some systems support versioning, but perhaps we should take a broader look at this task. Variability looks somewhat philosophical, which is why it is on the list twice, but these are different kinds of variability: companies themselves change, and data changes. While you're thinking about a question, no one needs the answer anymore - such are the realities of our dynamic world. All in all, the fun is just beginning.