Qwen QwQ-32B is out - a reasoning model comparable to DeepSeek R1 and o1-mini

March 6, 2025

The Chinese team Qwen has released QwQ-32B, a reasoning model, under the Apache 2.0 open source license.

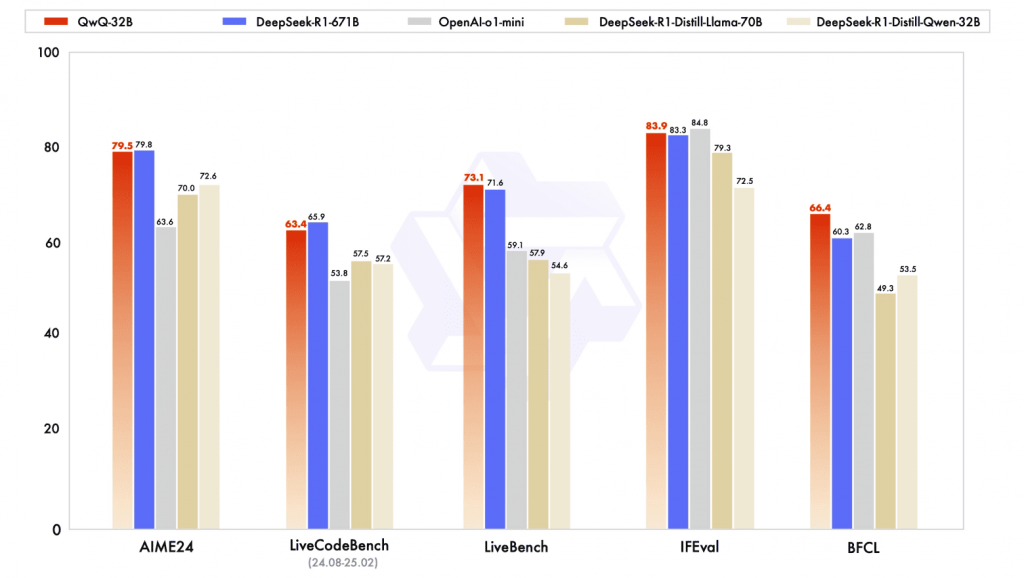

Despite its small size of 32B parameters, the model is comparable on the reported benchmarks to the giant DeepSeek R1 with 671B parameters. It also significantly outperforms the so-called distilled models: DeepSeek-R1-Llama-70B (Llama 70B fine-tuned on DeepSeek R1 reasoning traces) and DeepSeek-R1-Qwen-32B (Qwen 32B fine-tuned on DeepSeek R1 reasoning traces).

In the tests conducted, the model answers quite well in Russian. It also correctly solved the "trick" questions that models usually get wrong when they answer without reasoning, and that are often used in tests:

- Which is greater - 3.11 or 3.9?
- Olya has two brothers and three sisters. How many sisters does Olya's brother have?
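For reference, the expected answers to both questions can be checked with a few lines of Python. This is only an illustrative sketch: the helper names `larger` and `sisters_of_brother` are made up for this example, and the second function assumes that Olya is a girl and that all the siblings belong to the same family.

```python
from decimal import Decimal


def larger(a: str, b: str) -> str:
    """Return whichever of two decimal strings is numerically larger."""
    return a if Decimal(a) > Decimal(b) else b


def sisters_of_brother(girl_sisters: int) -> int:
    """Sisters as counted by a brother: the girl's sisters plus the girl herself."""
    return girl_sisters + 1


print(larger("3.11", "3.9"))   # 3.9
print(sisters_of_brother(3))   # 4
```

The first question trips models up because 11 is greater than 9 when the fractional parts are read as integers; the second because Olya herself is easy to leave out of the count.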

Overall, the model looks very interesting - a 32B model can, in principle, be run on a home machine at a decent speed, unlike the powerful but gigantic DeepSeek R1 671B.
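A rough back-of-the-envelope estimate shows why the size difference matters. The sketch below counts only the memory needed to hold the weights, using the nominal parameter counts from the model names (32B and 671B). The 16-, 8- and 4-bit precisions are assumptions chosen for illustration, and the KV cache, activations and runtime overhead are ignored, so real requirements will be higher.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Nominal parameter counts taken from the model names (assumption).
for name, params in [("QwQ-32B", 32), ("DeepSeek R1", 671)]:
    for bits in (16, 8, 4):
        print(f"{name}: {bits}-bit ~ {weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, QwQ-32B at 4 bits per weight needs about 16 GB for its weights, which is within reach of a single high-end consumer GPU or a machine with plenty of RAM, while DeepSeek R1 at the same precision needs about 335 GB.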